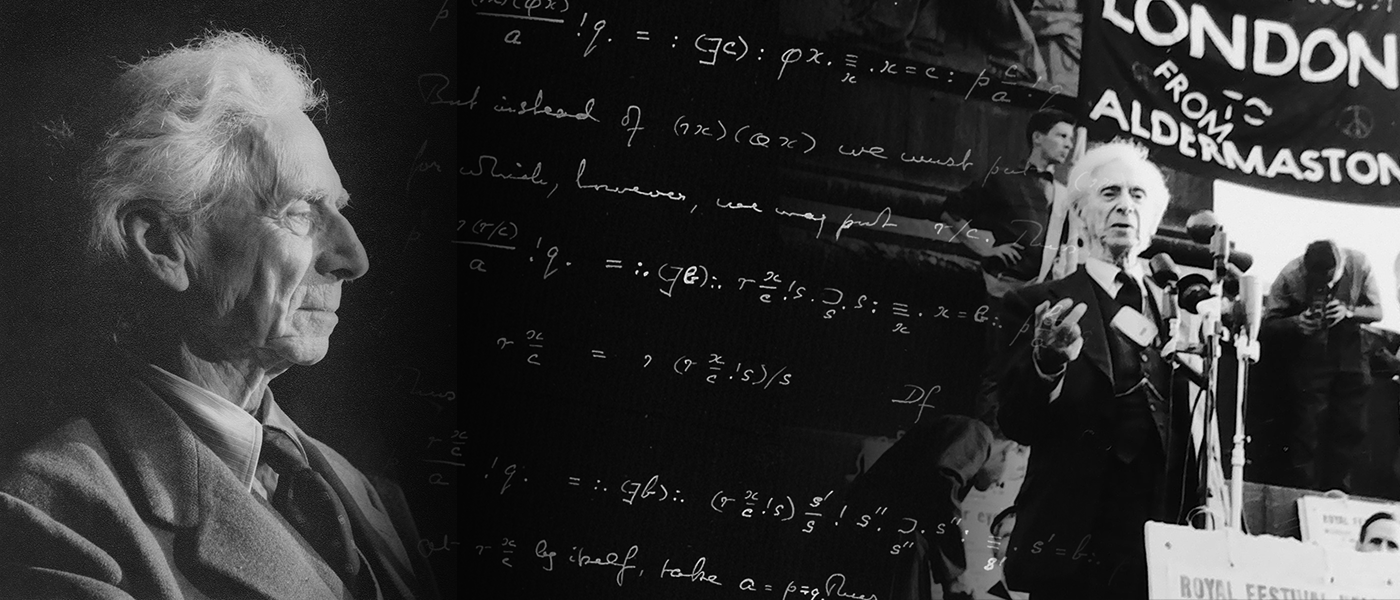

Welcome to the Bertrand Russell Research Centre at McMaster University

We are the world’s foremost institute conducting and promoting scholarship in Russell studies.

McMaster is also home to the Bertrand Russell Archives, the largest collection of Russell material in the world.

The Archives are maintained and curated by the University Library.

Learn More

McMaster logo

McMaster logo

McMaster logo

McMaster logo